Radar and Lidar: Complementing Visual Data

Radar's Role in Enhancing Perception

Radar, or radio detection and ranging, plays a crucial role in autonomous vehicle perception. Because it is an active sensor that emits its own radio waves, its measurements are largely independent of lighting conditions. Unlike cameras, radar works in complete darkness and continues to function in fog, rain, and snow. This makes radar an essential component of a robust sensor suite, allowing autonomous vehicles to perceive their surroundings reliably across a wide range of weather conditions. Radar's ability to detect objects at long range and to measure their relative speed directly makes it invaluable for object tracking and motion prediction.

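Radar's particular strength is measuring the range, radial (line-of-sight) velocity, and bearing of objects. Range follows from the round-trip delay of the reflected signal, and radial velocity from its Doppler shift. Acceleration is not measured directly, but it can be estimated by tracking how velocity changes across successive measurements. This information supports more accurate predictions of object behavior, which are crucial for safe autonomous driving maneuvers.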
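As a rough illustration of how radar obtains these quantities, the sketch below uses two textbook relations for an idealized radar: range R = c·t/2 from the echo's round-trip time t, and radial velocity v = f_d·c/(2·f_c) from the two-way Doppler shift f_d at carrier frequency f_c. It is a simplified sketch under stated assumptions: real automotive radars (commonly operating around 77 GHz) extract delay and Doppler from FMCW chirps rather than timing a single pulse, the sign convention is an assumption, and the function names are hypothetical. The last function shows that acceleration has to be derived from successive velocity estimates rather than measured.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def radar_range(round_trip_time_s: float) -> float:
    """Range from echo delay: the signal travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

def radial_velocity(doppler_shift_hz: float, carrier_freq_hz: float) -> float:
    """Closing speed from the two-way Doppler shift f_d = 2 * v * f_c / c.

    Sign convention (assumed): positive when the target approaches the sensor.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_freq_hz)

def estimate_acceleration(velocities: list[float], dt: float) -> list[float]:
    """Finite-difference acceleration from velocities sampled every dt seconds."""
    return [(v1 - v0) / dt for v0, v1 in zip(velocities, velocities[1:])]

# Usage with an assumed 77 GHz carrier:
echo_range = radar_range(1e-6)                   # about 149.9 m
closing_speed = radial_velocity(5_000.0, 77e9)   # about 9.7 m/s
accelerations = estimate_acceleration([10.0, 11.0, 13.0], dt=0.5)  # [2.0, 4.0]
```

A finite difference amplifies measurement noise, which is why trackers usually estimate acceleration inside a filter rather than differencing raw velocities.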

Lidar's Precision in 3D Mapping

Lidar, or light detection and ranging, provides high-resolution 3D point clouds of the environment by measuring the distance to surfaces along many laser beams. This detailed 3D representation is critical for building accurate maps and understanding the spatial relationships between objects. Because each return gives a precise distance along a known beam direction, lidar can capture the shape and size of objects accurately, which is essential for safe navigation.
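Many automotive lidars are pulsed time-of-flight sensors: the range to a surface is half the distance light travels before the reflection returns, and each range is paired with the known azimuth and elevation of the beam that produced it. The sketch below, assuming such a sensor and a hypothetical frame with x forward, y left, and z up, converts raw returns into the (x, y, z) points that make up a point cloud. The function names and the example timing are illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_to_range(time_of_flight_s: float) -> float:
    """The pulse travels to the surface and back, so range is half the path length."""
    return SPEED_OF_LIGHT * time_of_flight_s / 2.0

def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return from sensor spherical coordinates to (x, y, z).

    Assumed frame: x forward, y left, z up; azimuth measured from x toward y,
    elevation measured upward from the horizontal plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )

def returns_to_point_cloud(returns):
    """returns: iterable of (time_of_flight_s, azimuth_rad, elevation_rad) tuples."""
    return [to_cartesian(tof_to_range(t), az, el) for t, az, el in returns]

# A return after 66.7 ns, straight ahead and level, lies about 10 m in front of the sensor;
# the second return comes from a beam pointing 90 degrees to the left.
cloud = returns_to_point_cloud([(66.7e-9, 0.0, 0.0), (66.7e-9, math.radians(90), 0.0)])
```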

Accumulated into detailed maps of the environment, this high-resolution 3D data lets autonomous vehicles understand their surroundings with a high degree of precision. That precision is vital for tasks such as path planning, obstacle avoidance, and object recognition.
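One simple way lidar points can feed mapping and obstacle avoidance is a 2D occupancy grid: the area around the vehicle is divided into cells, and a cell is marked occupied when a point within an obstacle-height band falls inside it. The sketch below is deliberately minimal; the cell size, grid extent, height thresholds, and function names are illustrative assumptions, and practical mapping systems typically update cells probabilistically and cast rays to mark free space as well.

```python
def occupancy_grid(points, cell_size=0.5, extent=20.0, min_z=0.2, max_z=2.5):
    """Build a square boolean grid centred on the vehicle.

    points: iterable of (x, y, z) in metres in the vehicle frame.
    Points below min_z (ground) or above max_z (e.g. overhanging branches)
    are ignored. Cells cover [-extent, extent) in both x and y.
    """
    n = int(2 * extent / cell_size)
    grid = [[False] * n for _ in range(n)]
    for x, y, z in points:
        if not (min_z <= z <= max_z):
            continue
        col = int((x + extent) // cell_size)
        row = int((y + extent) // cell_size)
        if 0 <= row < n and 0 <= col < n:
            grid[row][col] = True
    return grid

def is_occupied(grid, x, y, cell_size=0.5, extent=20.0):
    """Return True if the cell containing (x, y) holds an obstacle.

    Positions outside the grid are reported as unoccupied here; a planner
    would usually treat them as unknown instead.
    """
    n = len(grid)
    col = int((x + extent) // cell_size)
    row = int((y + extent) // cell_size)
    return 0 <= row < n and 0 <= col < n and grid[row][col]

grid = occupancy_grid([(1.0, 0.0, 1.0), (3.0, 3.0, 0.05)])  # second point is a ground return
blocked = is_occupied(grid, 1.2, 0.1)  # True: same cell as the first point
```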

Complementing Camera Data for Robustness

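Cameras provide rich visual information such as color, texture, traffic signs, and lane markings, but their performance can degrade significantly in low light, glare, and adverse weather such as heavy rain or snow. Radar continues to operate reliably in these conditions. Lidar, as an active sensor, is unaffected by darkness, although fog, heavy rain, and snow also reduce its effective range and add spurious returns. Radar and lidar therefore provide crucial redundancy where cameras are weakest. By combining the strengths of all three sensors, autonomous vehicles can build a more comprehensive and robust understanding of their surroundings, improving safety and reliability.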

Fusion of Sensor Data: Improving Accuracy and Reliability

Sensor fusion, the process of combining data from multiple sensors, is essential for a comprehensive and reliable understanding of the environment. By integrating radar, lidar, and camera data, an autonomous vehicle can improve the accuracy and reliability of its perception system: combining modalities allows it to detect objects that a single sensor might miss and reduces the impact of each sensor's individual limitations.
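A standard way to combine two independent measurements of the same quantity is inverse-variance weighting, where each estimate is weighted by 1/σ². It gives the same result as a Kalman filter update when one estimate is treated as the prior. The sketch below assumes unbiased measurements with independent Gaussian errors; the function name is hypothetical, and the range values and variances in the usage lines are made-up illustrative numbers, not sensor specifications.

```python
def fuse_estimates(estimates):
    """Fuse independent, unbiased estimates of one quantity.

    estimates: list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance). The fused variance is never
    larger than the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total

# Hypothetical example: radar and lidar range to the same car (metres, metres^2).
radar_range = (50.4, 0.25)
lidar_range = (50.0, 0.01)
value, variance = fuse_estimates([radar_range, lidar_range])
# value is about 50.015 m and variance about 0.0096 m^2: dominated by the more precise lidar
```

The weighting lets each sensor contribute in proportion to its confidence, so a noisy sensor still adds information without dragging the result far from a precise one.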

Overcoming Limitations of Individual Sensors

Each sensor has its limitations. Cameras struggle in low light and glare, and a single camera does not measure distance directly; lidar is comparatively expensive and can produce missing or spurious points on highly reflective, transparent, or very dark surfaces; and radar's relatively coarse angular resolution makes precise categorization of objects difficult. Integrating these sensors yields a perception system that mitigates these individual limitations.
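To show how complementary sensors can cover each other's gaps, the sketch below merges per-sensor detections into one object list: lidar supplies position and extent, radar supplies radial velocity, and the camera supplies a class label. It is a simplified late-fusion example under strong assumptions: all detections are already transformed into a common, calibrated vehicle frame (camera detections hypothetically projected onto the ground plane), association is greedy nearest-neighbour within a fixed gate, and one radar or camera detection may be matched to several lidar objects. Real systems typically enforce one-to-one assignment (for example with the Hungarian algorithm) and track objects over time. All class and field names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass
class LidarObject:        # cluster of lidar points: good geometry
    x: float
    y: float
    length: float
    width: float

@dataclass
class RadarDetection:     # good velocity, coarse position
    x: float
    y: float
    radial_velocity: float

@dataclass
class CameraDetection:    # good semantics; position assumed projected to the ground
    x: float
    y: float
    label: str

@dataclass
class FusedObject:
    x: float
    y: float
    length: float
    width: float
    radial_velocity: Optional[float]  # None if no radar detection fell within the gate
    label: Optional[str]              # None if no camera detection fell within the gate

def nearest_within(x: float, y: float, candidates: Sequence, gate_m: float):
    """Return the candidate closest to (x, y), or None if none lies within gate_m metres."""
    best, best_dist = None, gate_m
    for c in candidates:
        dist = math.hypot(c.x - x, c.y - y)
        if dist <= best_dist:
            best, best_dist = c, dist
    return best

def fuse(lidar_objects, radar_detections, camera_detections, gate_m=2.0):
    """Attach the nearest radar velocity and camera label to each lidar object."""
    fused = []
    for obj in lidar_objects:
        radar = nearest_within(obj.x, obj.y, radar_detections, gate_m)
        camera = nearest_within(obj.x, obj.y, camera_detections, gate_m)
        fused.append(FusedObject(
            obj.x, obj.y, obj.length, obj.width,
            radial_velocity=radar.radial_velocity if radar is not None else None,
            label=camera.label if camera is not None else None,
        ))
    return fused

objects = fuse(
    [LidarObject(10.0, 0.0, 4.5, 1.8), LidarObject(30.0, 5.0, 0.6, 0.6)],
    [RadarDetection(10.5, 0.2, -3.0)],
    [CameraDetection(9.8, -0.1, "car")],
)
```

In this example the first lidar object gains a velocity and a "car" label, while the distant second object keeps only its lidar geometry, showing how a missing modality degrades gracefully instead of dropping the object.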

Real-World Applications and Future Trends

The fusion of radar, lidar, and camera data is central to modern autonomous driving, and its applications extend beyond vehicles to robots, drones, and other autonomous systems. Current work focuses on more sophisticated fusion algorithms that process data from multiple sensors jointly to improve object recognition and decision-making.
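A common building block for fusing sensor streams over time is the Kalman filter. The sketch below tracks one object's position and velocity along a single axis with a constant-velocity motion model and accepts scalar measurements from any sensor through a measurement row h: here lidar is assumed to measure position (h = (1, 0)) and radar to measure velocity (h = (0, 1)), each with its own noise variance r. The motion model, the process-noise value q, the noise variances, and the class name are illustrative assumptions; real trackers are multi-dimensional, and radar actually measures radial rather than axis-aligned velocity.

```python
from dataclasses import dataclass, field

@dataclass
class ConstantVelocityKF:
    """1D Kalman filter with state [position, velocity] and 2x2 covariance P."""
    p: float = 0.0
    v: float = 0.0
    P: list = field(default_factory=lambda: [[100.0, 0.0], [0.0, 100.0]])  # large initial uncertainty
    q: float = 1.0  # process-noise intensity (assumed white-noise acceleration)

    def predict(self, dt: float) -> None:
        """x <- F x and P <- F P F^T + Q, with F = [[1, dt], [0, 1]]."""
        a, b, d = self.P[0][0], self.P[0][1], self.P[1][1]
        self.p += dt * self.v
        q = self.q
        self.P = [
            [a + 2 * dt * b + dt * dt * d + q * dt**3 / 3, b + dt * d + q * dt**2 / 2],
            [b + dt * d + q * dt**2 / 2, d + q * dt],
        ]

    def update(self, z: float, h: tuple, r: float) -> None:
        """Fuse one scalar measurement z = h . [p, v] + noise of variance r."""
        h0, h1 = h
        s0 = self.P[0][0] * h0 + self.P[0][1] * h1  # (P h^T)[0]
        s1 = self.P[1][0] * h0 + self.P[1][1] * h1  # (P h^T)[1]
        S = h0 * s0 + h1 * s1 + r                   # innovation variance
        k0, k1 = s0 / S, s1 / S                     # Kalman gain
        innovation = z - (h0 * self.p + h1 * self.v)
        self.p += k0 * innovation
        self.v += k1 * innovation
        # P <- (I - K h) P, using h P = (P h^T)^T because P is symmetric
        self.P = [
            [self.P[0][0] - k0 * s0, self.P[0][1] - k0 * s1],
            [self.P[1][0] - k1 * s0, self.P[1][1] - k1 * s1],
        ]

# Usage: a noiseless target moving at 5 m/s, observed every 0.1 s by both sensors.
kf = ConstantVelocityKF()
dt = 0.1
for step in range(1, 51):
    kf.predict(dt)
    kf.update(5.0 * step * dt, h=(1.0, 0.0), r=0.01)  # lidar position
    kf.update(5.0, h=(0.0, 1.0), r=0.04)              # radar velocity
```

Because each update only needs its own h and r, measurements from different sensors can be applied in whatever order they arrive, which is one reason this structure is popular for multi-sensor tracking.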

Challenges in Sensor Fusion Implementation

While the advantages of sensor fusion are clear, implementing it in practice presents significant challenges. Sensors sample at different rates and times, so their data must be synchronized; their measurements must be calibrated into a common coordinate frame; data arriving in different formats (images, point clouds, radar detections) must be merged; and robust algorithms are needed to interpret the combined result. Efficient and accurate fusion algorithms are critical for the reliable operation of autonomous systems in complex environments.
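Because sensors sample at different rates and times, a fusion pipeline usually has to express every measurement at a common timestamp. A minimal approach, sketched below, linearly interpolates one sensor's time series onto another sensor's timestamps and refuses to extrapolate beyond the recorded span. It assumes the sensors already share a synchronized clock and that the quantity varies smoothly between samples; the function names and example values are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple

def interpolate_at(timestamps: Sequence[float], values: Sequence[float],
                   t: float) -> Optional[float]:
    """Linearly interpolate a strictly increasing time series at time t.

    Returns None when t lies outside the recorded span (no extrapolation).
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)  # first index with timestamps[i] >= t
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    alpha = (t - t0) / (t1 - t0)
    return values[i - 1] + alpha * (values[i] - values[i - 1])

def align(reference_times: Sequence[float], other_times: Sequence[float],
          other_values: Sequence[float]) -> List[Tuple[float, Optional[float]]]:
    """Resample another sensor's measurements at each reference timestamp."""
    return [(t, interpolate_at(other_times, other_values, t)) for t in reference_times]

# Hypothetical radar ranges at 20 Hz, resampled at hypothetical lidar timestamps.
radar_times = [0.00, 0.05, 0.10]
radar_ranges = [20.0, 20.5, 21.0]
aligned = align([0.025, 0.10, 0.20], radar_times, radar_ranges)
```

Returning None for the last timestamp, which lies after the final radar sample, makes the gap explicit so downstream code can wait for new data or fall back to prediction instead of silently extrapolating.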

Real-World Applications and Future Directions

Real-World Applications of Emerging Technologies

Emerging technologies like artificial intelligence (AI) and machine learning (ML) are transforming numerous industries, from healthcare to finance. AI-powered diagnostic tools have shown promising accuracy and efficiency in identifying some diseases, with the potential to save lives and reduce healthcare costs. These tools can analyze large volumes of patient data, spotting patterns and anomalies that human clinicians might miss. AI is also advancing personalized medicine by supporting the development of treatments tailored to individual genetic profiles and health conditions.

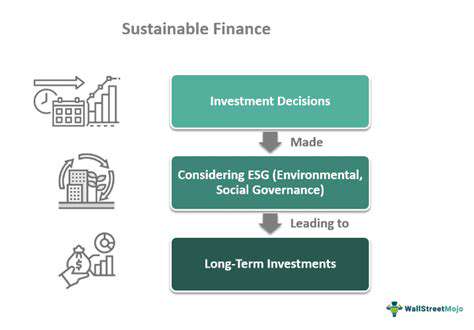

In the financial sector, AI algorithms are used for fraud detection and risk assessment. These systems can flag suspicious transactions quickly and accurately, helping to reduce financial losses. AI-driven chatbots are also improving customer service by providing instant support and resolving routine queries efficiently. These applications show how widely emerging technologies now reach into modern life.

Future Possibilities and Challenges

The future of emerging technologies holds considerable promise. Imagine a world where education adapts to each student's learning style and pace, making learning more engaging and effective. Applying AI and machine learning in educational platforms could make this vision a reality and broaden access to quality education worldwide. Advances in robotics and automation could also transform industries by automating repetitive tasks, freeing human workers for more creative and strategic work.

However, the integration of these technologies also presents various challenges. Ethical considerations surrounding data privacy and algorithmic bias are paramount. Ensuring fairness and transparency in AI systems is crucial to avoid perpetuating existing societal inequalities. Additionally, the potential displacement of workers by automation necessitates proactive measures to support workforce transition and reskilling initiatives. Addressing these challenges head-on is vital for harnessing the full potential of emerging technologies while mitigating their risks.

Ethical Considerations and Societal Impact

The widespread adoption of emerging technologies necessitates careful consideration of their ethical implications. Questions surrounding data privacy, algorithmic bias, and the potential for misuse are crucial to address. Robust regulations and ethical guidelines are necessary to ensure that these technologies are used responsibly and beneficially. Furthermore, the societal impact of automation and job displacement requires careful planning and proactive measures to support workers and communities affected by these changes. It's imperative to foster a culture of responsible innovation that prioritizes human well-being and societal progress.

The integration of emerging technologies into various aspects of our lives raises crucial questions about the future of work, education, and society as a whole. Understanding these implications and proactively addressing the associated challenges is essential to harnessing the potential benefits while mitigating the risks.