In Central American rainforests, certain ant species defend acacia trees from hungry herbivores. This protection comes at a price: the trees must spend resources housing and feeding their six-legged bodyguards. Such arrangements show how millions of years of co-evolution can produce partnerships that benefit both sides.

Below ground, fungal networks weave through soil, connecting plant roots in what scientists call the wood wide web. These mycorrhizal networks can move nutrients between trees and may carry chemical warning signals, although how much this matters in natural forests is still debated. Seen this way, a forest behaves less like a collection of isolated trees and more like an interconnected system.

These biological partnerships teach us valuable lessons about cooperation. They demonstrate how specialization and mutual dependence can create systems more resilient than any individual component. As we develop autonomous technologies, understanding these natural models of collaboration becomes increasingly relevant.

Designing Intuitive Human-Machine Partnerships for Vehicle Safety

Crafting Natural Interaction Models

Creating effective human-machine interfaces requires balancing technological capabilities with human psychology. Designers must understand how people naturally process information and make decisions in high-pressure situations. The best interfaces feel intuitive because they align with our existing mental models rather than forcing users to adapt to machine logic.

Building Fault-Tolerant Systems

Even the most carefully designed systems must account for human error. Effective interfaces incorporate multiple layers of protection, from clear visual warnings to progressive disclosure of complex functions. When mistakes occur, the system should provide constructive guidance rather than cryptic error codes.

Consider how modern aircraft cockpits handle emergencies: the alerting system puts critical warnings first and suppresses less urgent alerts. This hierarchical approach prevents information overload during crises. Such designs don't just prevent errors - they create environments where humans and machines complement each other's strengths.
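The prioritization idea can be sketched in a few lines of Python. This is a minimal illustration, not a description of any real cockpit or vehicle system: the `Severity` levels, the `Alert` type, the `alerts_to_display` function, and the `max_shown` limit are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical severity levels; higher values are more urgent.
    ADVISORY = 1
    CAUTION = 2
    WARNING = 3


@dataclass(frozen=True)
class Alert:
    message: str
    severity: Severity


def alerts_to_display(active, max_shown=3):
    """Return the alerts to show, most urgent first.

    While any WARNING is active, lower-severity alerts are suppressed
    so the operator sees only the critical items.
    """
    if not active:
        return []
    top = max(a.severity for a in active)
    floor = Severity.WARNING if top == Severity.WARNING else Severity.ADVISORY
    visible = [a for a in active if a.severity >= floor]
    # list.sort is stable, so alerts of equal severity keep arrival order.
    visible.sort(key=lambda a: a.severity, reverse=True)
    return visible[:max_shown]
```

With a brake-failure warning active, a low washer-fluid advisory would be held back until the warning clears. Whether suppressed alerts should be hidden entirely or only dimmed is a design choice this sketch does not settle.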

The Language of Visual Communication

Effective interfaces speak to users through carefully designed visual language. Color, shape, and motion convey meaning at a glance, often faster than text can be read. A well-designed dashboard uses consistent visual grammar - red always means stop, flashing indicates urgency, and green confirms successful actions.

These visual cues create rapid feedback loops, letting operators know immediately whether their commands were understood. When designing for autonomous vehicles, this instant communication becomes crucial for maintaining trust between human operators and machine intelligence.
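One way to keep a visual grammar consistent is to define it in a single place and derive every indicator from it. The sketch below assumes hypothetical names (`State`, `Cue`, `COLOR_FOR_STATE`, `cue_for`) and encodes only the three conventions mentioned above: red for stop, green for confirmation, and flashing for urgency.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    # Hypothetical system states that need a visual cue.
    STOP = "stop"
    CONFIRMED = "confirmed"


@dataclass(frozen=True)
class Cue:
    color: str
    flashing: bool


# Single source of truth: each state has exactly one color.
COLOR_FOR_STATE = {
    State.STOP: "red",
    State.CONFIRMED: "green",
}


def cue_for(state, urgent=False):
    """Build the cue for a state; flashing is reserved for urgency."""
    return Cue(color=COLOR_FOR_STATE[state], flashing=urgent)
```

Because color and flashing are set independently, an urgent stop is still red, and nothing other than urgency can make an indicator flash.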

Training for Symbiotic Operation

No interface exists in isolation - effective use requires proper training that emphasizes the collaborative nature of human-machine systems. Training programs should focus on developing intuitive understanding rather than rote memorization of commands.

Simulators that replicate real-world scenarios help operators develop the situational awareness needed to work effectively with autonomous systems. This training transforms users from passive observers into active partners in the control loop.

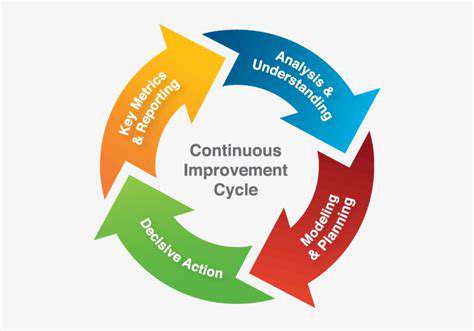

Designing for Evolutionary Improvement

As autonomous systems grow more sophisticated, their interfaces must evolve in parallel. Modular designs allow for gradual improvements without requiring complete overhauls. This evolutionary approach ensures continuity while accommodating technological advancements.

Navigating the Moral Labyrinth of Autonomous Decision-Making

The Complexity of Machine Ethics

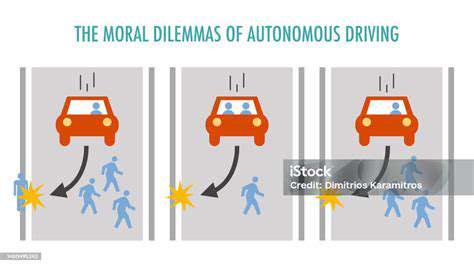

Programming ethics into autonomous systems presents unprecedented challenges. Unlike biological organisms that evolved moral instincts over eons, machines must derive their ethical frameworks from explicit programming. This requires translating nuanced human values into precise algorithmic rules - a hard task, given that philosophers have debated those values for centuries without reaching consensus.

Principles of Machine Moral Reasoning

Effective ethical frameworks for autonomous systems must balance multiple philosophical approaches. Utilitarian calculations might dictate minimizing total harm, while deontological principles would prohibit certain actions regardless of consequences. The true challenge lies in creating systems that can navigate these competing ethical theories in real-world scenarios.
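One commonly discussed way to combine the two approaches is to treat deontological rules as hard constraints and apply a utilitarian comparison only among the options that remain. The sketch below illustrates that ordering and nothing more: the `Action` type, its `expected_harm` and `violates_rule` fields, and the `choose_action` function are hypothetical, and producing trustworthy harm estimates is the genuinely difficult part that the example takes as given.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    expected_harm: float  # hypothetical harm estimate; lower is better
    violates_rule: bool   # True if the action breaks a hard constraint


def choose_action(candidates):
    """Filter by hard constraints first, then minimize expected harm.

    Returns None when every candidate breaks a rule, signalling that
    the decision should be escalated rather than made automatically.
    """
    permitted = [a for a in candidates if not a.violates_rule]
    if not permitted:
        return None
    return min(permitted, key=lambda a: a.expected_harm)
```

Under this ordering, an action that breaks a rule is never selected, even when its estimated harm is the lowest. Other designs weigh the two considerations against each other instead; which is preferable is exactly the open question described above.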

Transparency in Algorithmic Decisions

When autonomous systems make life-altering decisions, understanding their reasoning becomes crucial. Black box algorithms that provide no explanation for their choices erode public trust. Developers must create systems capable of explaining their decision-making processes in terms humans can understand and evaluate.

This transparency serves two vital functions: it allows for human oversight of machine decisions, and it creates opportunities for continuous ethical improvement. Like a judge explaining a legal ruling, autonomous systems should be able to articulate why they chose one course of action over another.
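A simple starting point for this kind of explanation is to record, alongside each decision, the reason it was chosen and the reasons the alternatives were not. The `Decision` record and `explain` function below are hypothetical names used for illustration; a real system would need to generate the reasons from its actual decision logic rather than from hand-written strings.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    chosen: str
    rationale: str
    rejected: dict  # option name -> reason it was not chosen


def explain(decision):
    """Render a decision record as a short human-readable explanation."""
    lines = [f"Chose '{decision.chosen}' because {decision.rationale}."]
    for option, reason in decision.rejected.items():
        lines.append(f"Rejected '{option}': {reason}.")
    return "\n".join(lines)
```

Keeping the record separate from its rendering means the same data can feed both a plain-language message for the operator and a log for later review.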

Cultural Context in Ethical Programming

Ethical norms vary dramatically across cultures - actions considered proper in one society may be taboo in another. Autonomous systems operating in global contexts must navigate these differences sensitively. This might involve creating adaptable ethical frameworks that can adjust to local norms while maintaining fundamental human rights standards.

The Learning Curve of Machine Morality

Just as humans develop moral reasoning through experience, autonomous systems may need similar learning mechanisms. Rather than relying solely on pre-programmed rules, future systems might incorporate feedback loops that allow ethical algorithms to evolve based on real-world outcomes and human guidance.

This approach mirrors how children develop moral understanding - through experience, reflection, and social feedback. Creating machines that can learn ethics presents both enormous potential and significant risks that demand careful consideration.

Accountability in Autonomous Systems

When autonomous systems make decisions with moral consequences, determining accountability becomes essential. Clear frameworks must establish whether responsibility lies with programmers, operators, manufacturers, or the algorithms themselves. This legal and ethical groundwork must evolve alongside the technology to ensure proper oversight and recourse.