Autonomous Vehicles and the Moral Calculus

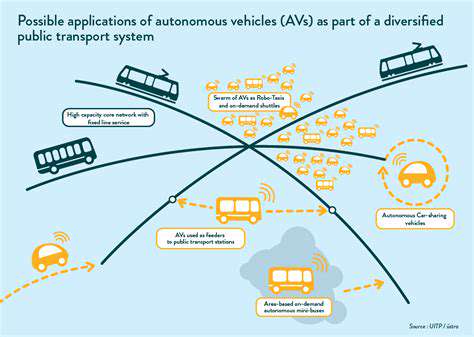

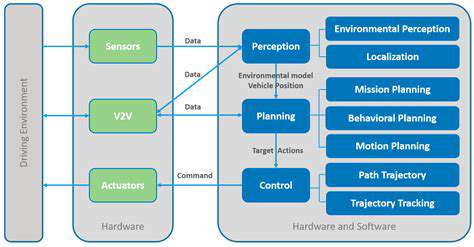

As self-driving cars become more prevalent, we're forced to confront an uncomfortable question: how should machines handle morally ambiguous situations where human lives hang in the balance? Unlike theoretical thought experiments, these dilemmas occur in messy reality - with unpredictable pedestrians, sudden obstacles, and split-second decisions. The algorithms governing these vehicles must balance competing priorities while respecting diverse societal values.

While philosophers have pondered trolley problems for decades, real-world driving scenarios present far greater complexity. Autonomous systems must process multiple moving variables simultaneously: vehicle speeds, pedestrian behavior, road conditions, and more. This demands ethical frameworks that extend beyond simple either/or choices to consider nuanced probabilities and consequences.

The Importance of Prioritizing Vulnerable Road Users

Pedestrians and cyclists face disproportionate risk in traffic accidents - a reality that must inform autonomous vehicle programming. When collisions become unavoidable, should the system prioritize a pedestrian's life over a passenger's safety? This difficult calculus involves legal, ethical, and social dimensions that resist simple solutions.

Programming such decisions requires extraordinary care. While factors like age or health status might seem relevant, introducing these variables risks creating biased algorithms that discriminate against certain groups. Engineers must develop weighting systems that protect the vulnerable without introducing new forms of injustice.
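One way to picture such a weighting system is as an expected-harm score: each candidate maneuver is scored by the probability and severity of harm to everyone it affects, with an extra weight for unprotected road users. The sketch below is purely illustrative. The class names, the weight values, and the `choose_maneuver` helper are all assumptions made for this example, not a description of how any production vehicle decides. The weights depend only on the category of road user, never on personal attributes such as age or health.

```python
from dataclasses import dataclass
from enum import Enum


class RoadUser(Enum):
    PEDESTRIAN = "pedestrian"
    CYCLIST = "cyclist"
    VEHICLE_OCCUPANT = "vehicle_occupant"


# Hypothetical weights: unprotected road users count more because they
# lack a vehicle's physical protection. The values are illustrative only.
VULNERABILITY_WEIGHT = {
    RoadUser.PEDESTRIAN: 1.5,
    RoadUser.CYCLIST: 1.4,
    RoadUser.VEHICLE_OCCUPANT: 1.0,
}


@dataclass(frozen=True)
class Risk:
    user: RoadUser
    collision_probability: float  # estimated, between 0 and 1
    severity: float               # estimated injury severity, between 0 and 1


def expected_harm(risks):
    """Sum of weight * probability * severity over everyone affected."""
    return sum(
        VULNERABILITY_WEIGHT[r.user] * r.collision_probability * r.severity
        for r in risks
    )


def choose_maneuver(options):
    """Return the name of the maneuver with the lowest expected harm."""
    return min(options, key=lambda name: expected_harm(options[name]))


options = {
    "brake": [
        Risk(RoadUser.PEDESTRIAN, 0.2, 0.9),
        Risk(RoadUser.VEHICLE_OCCUPANT, 0.1, 0.3),
    ],
    "swerve": [Risk(RoadUser.VEHICLE_OCCUPANT, 0.4, 0.5)],
}
print(choose_maneuver(options))
```

Even in this toy form, the choice of weights is an ethical decision rather than a technical one, which is why it needs the scrutiny described above.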

The Dilemma of Algorithmic Decision-Making

Translating human ethics into machine code presents unprecedented challenges. Whose moral framework should guide these life-and-death decisions? Different cultures and individuals hold varying views about what constitutes ethical behavior in crisis situations. Developing consensus around these questions will shape the future of transportation.

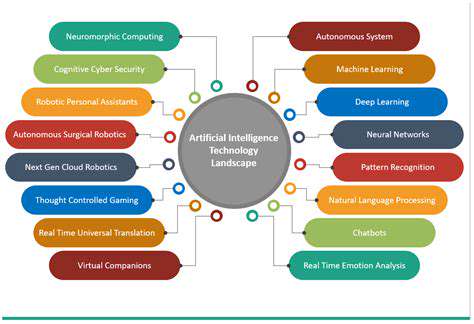

Algorithmic bias is another critical concern. Because machine learning systems are trained on historical data, they can perpetuate societal prejudices unless carefully audited. For instance, a pedestrian detector trained on unrepresentative footage could recognize some groups of people less reliably than others. Regular reviews and bias mitigation strategies must become standard practice in autonomous vehicle development.
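A basic audit of this kind can be sketched as a comparison of detection rates across groups in a labeled evaluation set. The example below is a minimal illustration under stated assumptions: the group labels, the `max_gap` threshold, and the choice of detection rate as the metric are hypothetical, and a real audit would use more metrics and far more data.

```python
from collections import defaultdict


def detection_rates(records):
    """Compute the share of correctly detected pedestrians per group.

    `records` is an iterable of (group_label, detected) pairs, where
    `detected` is True if the system recognized the pedestrian.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    return {group: hits[group] / totals[group] for group in totals}


def audit(records, max_gap=0.05):
    """Flag the model if the best and worst group rates differ too much.

    `max_gap` is a hypothetical tolerance chosen for this example.
    """
    rates = detection_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passed": gap <= max_gap}


# Placeholder evaluation data with two anonymous groups.
records = (
    [("group_a", True)] * 95 + [("group_a", False)] * 5
    + [("group_b", True)] * 80 + [("group_b", False)] * 20
)
report = audit(records)
print(report["passed"])
```

A failed audit does not say why the gap exists. It only signals that the training data or the model needs closer review before deployment.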

Societal Impact and Public Discourse

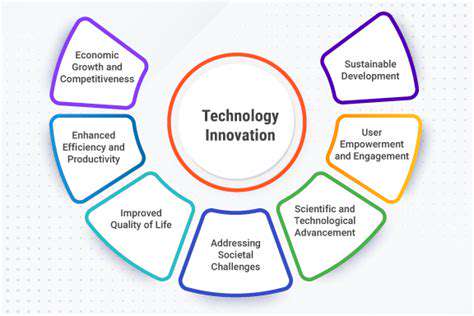

The implications of autonomous vehicle ethics extend far beyond traffic accidents. These systems will reshape our legal systems, social norms, and concepts of responsibility. Should manufacturers bear liability for algorithmic decisions? How will insurance models adapt? These questions demand input from diverse stakeholders - not just technologists.

Public engagement remains crucial for responsible development. Transparent discussions about capabilities and limitations can build trust while addressing legitimate concerns about job displacement and equitable access to emerging technologies.

The Need for Continuous Evaluation and Adaptation

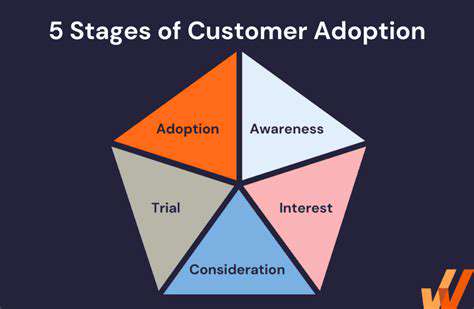

Ethical frameworks for autonomous vehicles can't remain static. As technology evolves and societal values shift, so too must our ethical guidelines. Regular real-world testing provides invaluable data for refining decision-making algorithms and addressing unforeseen scenarios.

An iterative approach helps keep systems aligned with public expectations. Ongoing monitoring allows developers to identify and correct biases while adapting to new ethical challenges that emerge as the technology matures.

Beyond the Algorithm: Human Oversight and Liability

Ensuring Safety Through Human Intervention

Despite advanced automation, human oversight remains critical for autonomous vehicle safety. Because no algorithm can anticipate every possible scenario, vehicles need well-designed mechanisms for human override. These mechanisms must balance automation with human judgment, particularly in ambiguous or high-risk situations.
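One simple form such an override mechanism could take is a handover rule: the system keeps control while it is confident, asks a human to take over when it is not, and falls back to a cautious stop if no human is available. The sketch below assumes a single `confidence` score and a hypothetical `min_confidence` threshold. Both are simplifications introduced for illustration, as are the mode names.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    REQUEST_HUMAN = "request_human"
    MINIMAL_RISK_STOP = "minimal_risk_stop"


@dataclass(frozen=True)
class Situation:
    confidence: float      # system's self-assessed confidence, 0 to 1
    human_available: bool  # whether an operator can take over right now


def select_mode(situation, min_confidence=0.8):
    """Decide who should be in control for the given situation.

    `min_confidence` is a hypothetical threshold, not a recommended value.
    """
    if situation.confidence >= min_confidence:
        return Mode.AUTONOMOUS
    if situation.human_available:
        return Mode.REQUEST_HUMAN
    # No confident plan and no operator: slow down and stop safely.
    return Mode.MINIMAL_RISK_STOP


print(select_mode(Situation(confidence=0.55, human_available=True)))
```

The hard design questions sit outside this function: how confidence is estimated, and how much warning a human needs before taking over safely.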

Effective human oversight requires comprehensive training programs. Operators need technical expertise and psychological preparedness to make sound decisions under pressure. Regular scenario-based drills can help maintain these critical skills while identifying areas for system improvement.

Establishing Clear Liability Frameworks

The legal landscape for autonomous vehicle accidents remains largely uncharted. Traditional liability models, which generally assume a human driver is in control, break down when machines make split-second decisions. New frameworks must clarify responsibilities across manufacturers, software developers, and vehicle operators, with provisions for evolving technology.

This legal evolution requires ongoing collaboration. Lawmakers must work closely with technologists to develop adaptive regulations that promote safety without stifling innovation. As capabilities advance, these frameworks will need periodic reassessment.

Addressing Ethical Dilemmas in Algorithmic Decision-Making

Programming ethical priorities into autonomous systems raises profound questions. How should algorithms value different lives in unavoidable accidents? These decisions require careful consideration of societal values, with input from diverse ethical perspectives.

Transparency in algorithmic design helps build public trust. Open discussions about programming choices can foster acceptance while identifying potential biases or unintended consequences before deployment.

The Future of Ethical Autonomous Vehicles: Promoting Transparency and Accountability

Ensuring Transparency in Decision-Making

Building public trust requires demystifying autonomous systems. Understandable explanations of algorithmic decisions, particularly in critical situations, can help users and regulators evaluate system performance. This transparency also facilitates identification of potential biases or flaws.
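In practice, an explanation has to start from a record of what the system decided and why. The sketch below shows one possible shape for such a record, written as a structured log line that a regulator or engineer could later inspect. The field names and the `to_log_line` helper are assumptions made for this example, not an established logging standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DecisionRecord:
    timestamp_s: float   # time of the decision, in seconds
    chosen_action: str   # what the vehicle did
    alternatives: tuple  # other actions that were considered
    reason: str          # short human-readable justification


def to_log_line(record):
    """Serialize a decision record as one JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    timestamp_s=12.5,
    chosen_action="brake",
    alternatives=("brake", "swerve"),
    reason="pedestrian detected in lane; braking distance sufficient",
)
print(to_log_line(record))
```

A plain-language `reason` field is the part most useful to non-specialists, but it is only trustworthy if it is generated from the same data the decision actually used.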

Documentation should extend beyond technical specifications. Clear communication about system capabilities and limitations helps set appropriate expectations while providing accountability when questions arise about vehicle behavior.

Developing Standardized Safety Protocols

Consistent safety standards across manufacturers benefit all stakeholders. Shared testing protocols for diverse conditions - from extreme weather to complex urban environments - help ensure baseline performance. Independent verification adds credibility to these assessments.

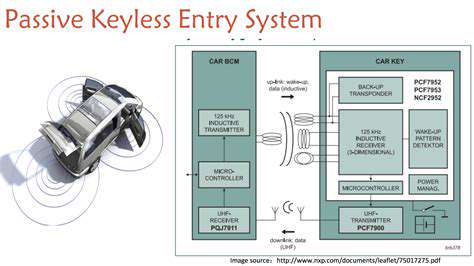

Standardization also supports interoperability. Common communication protocols between vehicles could enhance safety by enabling coordinated responses to potential hazards, creating a more resilient transportation ecosystem.